By Nathan Burr, Senior Engineering Manager

As advertising dollars and viewers continue to move away from traditional television, content owners and broadcasters are looking to ad-supported live event streaming as a way to engage new audiences and grow revenue through over-the-top (OTT) streaming. OTT offers new distribution opportunities, which enable a publisher to air and monetize licensed content that previously had no place on broadcast television. But before a publisher can begin to stream live events, technical hurdles in the first step of the streaming video workflow must be considered:

- Connection quality and bandwidth availability between the venue and the ingest point can be a weak link in the workflow. Redundancy and reliability must be built in.

- Live feeds need to be encoded into any number of adaptive bit rate formats and protocols such as Apple HLS and MPEG-DASH while minimizing latency.

- Upload and encoding solutions must be future-proof, reliable, and scalable to handle the emergence of 4K and other high-quality formats.

- Video workflow should be able to support server-side ad insertion for the best viewer experience and most opportune monetization strategies.

In this technology blog, we look at how we’ve built our platform to help publishers optimize the first step in the streaming video workflow, examine some of the challenges involved, and explain how we encode the live stream to enable high-performance, low-latency, ad-supported live streaming.

Background: The Slicer

Before we dive into the technical considerations of encoding, we need to explain our technology called the “Slicer.” A powerful software client, the Slicer on-ramps your venue’s live stream to our cloud video platform. It simplifies what is otherwise an extraordinarily complex task without sacrificing flexibility and functionality. The Slicer is a key reason why broadcasters, both those with extensive technical resources and those without, can leverage our platform to create powerfully differentiated OTT experiences.

The Slicer prepares your content for encoding, calculates ideal encoding settings, and manages ad insertion markers. You have the flexibility to run the Slicer on your secure hardware or choose the cost savings and scalability of cloud-agnostic locations supporting a range of formats including SDI, IP video, RTP/FEC, and RTMP.

The Slicer chunks your content into small pieces and encrypts them before sending them to our ISO-certified cloud encoding stack, giving you the peace of mind that comes with knowing your content is always secure. It offers a flexible range of workflows: simple one-click configurations, more advanced scripts in your programming language of choice, and cloud functions that trigger workflows for notifications, job processing, and machine-learning integrations.

Our “Live Slicer,” a version of the Slicer, is optimized for live event streaming. HD-SDI or IP-based feeds are quickly ingested and chunked into 2-second or 4-second encrypted segments at the highest desired bitrate, reducing bandwidth requirements to 3–5 Mbps for 1080p and around 15 Mbps for 4K. Our process automatically retains in-band and out-of-band metadata and messaging to trigger program and ad breaks or replace content. Our plugin architecture allows you to create customized scripts that handle your unique signaling event requirements. Live Slicer can also listen to SCTE 35 / 104 messages or receive API calls to insert ad breaks, content starts or blackout triggers.
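To get a feel for what those numbers mean on the wire, the sketch below estimates the payload size of a single segment from the bitrates and segment durations quoted above. The function name and the decimal-megabit convention are assumptions for illustration and are not part of the Slicer itself.

```python
def segment_size_bytes(bitrate_mbps: float, duration_s: float) -> int:
    """Approximate payload of one segment.

    Ignores container, encryption, and transport overhead (an assumption).
    Uses decimal megabits: 1 Mbps = 1,000,000 bits per second.
    """
    bits = bitrate_mbps * 1_000_000 * duration_s
    return int(bits // 8)


# Bitrates quoted in the text: 3-5 Mbps for 1080p, around 15 Mbps for 4K.
for label, mbps in [("1080p (low)", 3), ("1080p (high)", 5), ("4K", 15)]:
    for duration in (2, 4):
        size_mb = segment_size_bytes(mbps, duration) / 1_000_000
        print(f"{label:13s} {duration}s segment ~ {size_mb:.2f} MB")
```

Even at the 4K bitrate, a 4-second segment stays in the single-digit megabyte range, which is what makes per-segment upload and encoding practical.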

Minimizing bandwidth

Now that you understand the Slicer, you may be wondering why we would go to the effort of developing a front-end software component to assist with moving live streams from events to the cloud. Why couldn’t publishers send over an RTMP (Real-Time Messaging Protocol) stream, for instance? (You can do this if you want, but the vast majority of our customers take advantage of the Slicer.)

The answer has as much to do with consumer expectations for high-quality live streams as it does working around bandwidth challenges at live venues. It’s a matter of finding the right balance across a number of competing factors. On the one hand, you need to preserve as much of the original feed as possible, with an eye toward higher quality formats and 4K. On the other hand, you need to optimize the stream so it can be delivered efficiently without getting bogged down by additional overhead such as personalized advertising. Finding the right balance is critical for this step of the video workflow.

Here’s where the Slicer is essential. As noted above, it significantly reduces the bandwidth required for a given feed by creating the highest bitrate profile on site and only sending that profile to the cloud. In our observation — based on streaming millions of hours of live footage to billions of viewers around the world — the alternative approach of sending a significantly larger RTMP stream to the cloud doesn’t result in an appreciable increase in the quality of the viewing experience. But it does significantly increase bandwidth, which drives up cost.

Make no mistake, backhaul costs can add up quickly. If you need a satellite uplink, for example, a Ka-band truck rents for around $2,000 per day, and bandwidth costs about $400 an hour. Conditions at some venues, such as hotels, conference centers, or even sporting venues around the globe, are inconsistent and bandwidth-constrained. The bottom line is that it’s always a good idea to reduce upload bandwidth requirements as far as possible while still delivering a broadcast-like experience to your viewers.
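As a rough illustration of how those satellite figures combine, here is a small estimate using the approximate prices quoted above. Treating the truck rental and the hourly bandwidth charge as simply additive is an assumption, and the function name is hypothetical.

```python
def satellite_backhaul_cost(event_hours: float,
                            days: int = 1,
                            truck_per_day: float = 2000.0,
                            bandwidth_per_hour: float = 400.0) -> float:
    """Estimate uplink cost as truck rental plus hourly bandwidth.

    Default prices are the approximate figures from the text; real quotes vary.
    """
    return days * truck_per_day + event_hours * bandwidth_per_hour


# A single three-hour event on one rental day.
print(satellite_backhaul_cost(event_hours=3))

# A three-day tournament with six hours of coverage per day.
print(satellite_backhaul_cost(event_hours=18, days=3))
```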

Encoding hurdles

Once the live video feed leaves the venue, the next step in the workflow is encoding. Here, a video encoder creates multiple versions, or variants, of the audio and video at different bitrates, resolutions, and quality levels. It then segments the variants into a series of small files, or media segments. A number of additional steps must be performed as well, such as creating a media playlist for each variant, which lists the URLs of that variant’s media segments, and a master playlist that references the media playlists. The master playlist is what the player uses to choose the most appropriate variant for the device and the currently measured or available bandwidth.
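The relationship between the two playlist types can be sketched for HLS as follows. This is a minimal illustration that assumes a hypothetical three-rung ladder and made-up file names. The tags are standard HLS tags, but the output omits many attributes (such as codecs) that a production packager would include.

```python
from dataclasses import dataclass


@dataclass
class Variant:
    name: str            # hypothetical directory name for this rung
    bandwidth_bps: int   # peak bitrate advertised to the player
    width: int
    height: int


def media_playlist(variant: Variant, segment_count: int,
                   segment_duration: int = 4, first_sequence: int = 0) -> str:
    """Build a live media playlist listing one variant's segments."""
    lines = [
        "#EXTM3U",
        "#EXT-X-VERSION:3",
        f"#EXT-X-TARGETDURATION:{segment_duration}",
        f"#EXT-X-MEDIA-SEQUENCE:{first_sequence}",
    ]
    for i in range(first_sequence, first_sequence + segment_count):
        lines.append(f"#EXTINF:{segment_duration:.3f},")
        lines.append(f"{variant.name}/segment_{i}.ts")
    # No #EXT-X-ENDLIST tag: the stream is still live.
    return "\n".join(lines) + "\n"


def master_playlist(variants: list[Variant]) -> str:
    """Build a master playlist that points at each variant's media playlist."""
    lines = ["#EXTM3U"]
    for v in variants:
        lines.append(
            f"#EXT-X-STREAM-INF:BANDWIDTH={v.bandwidth_bps},"
            f"RESOLUTION={v.width}x{v.height}"
        )
        lines.append(f"{v.name}/playlist.m3u8")
    return "\n".join(lines) + "\n"


# Hypothetical ladder; the rungs and bitrates are illustrative only.
ladder = [
    Variant("1080p", 5_000_000, 1920, 1080),
    Variant("720p", 3_000_000, 1280, 720),
    Variant("360p", 800_000, 640, 360),
]
print(master_playlist(ladder))
print(media_playlist(ladder[0], segment_count=3))
```

The player fetches the master playlist once, picks a variant based on measured bandwidth, and then polls that variant's media playlist for new segments.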

Two major video streaming protocols add to the complexity, and these, along with others, may need to be supported to cover the myriad potential playback devices. HLS is an HTTP-based media streaming protocol developed by Apple. It is supported on all Apple devices as well as most, but not all, Google, Android, Linux, and Microsoft browsers and devices. To reach the rest, you also need MPEG-DASH, a competing HTTP-based media streaming protocol. You may also need to add support for Microsoft Smooth Streaming for gaming consoles.

DRM can also complicate encoding by requiring its own set of multiple formats to support large audience requirements. For example, older players that don’t support DRM need HLS and AES-128. Older iOS devices require HLS and FairPlay. Newer iOS devices support HLS and FairPlay and CMAF CBC. Older Windows and Android only support CMAF CTR. Newer Android, Windows, and iOS should support all CMAF formats. This means your content must be packaged in multiple different formats to allow playback on all of those devices.
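One way to reason about the packaging burden is to write the device matrix down as data and derive which formats you have to produce. The sketch below is a simplified reading of the paragraph above: the device groupings are coarse, the labels are informal, and the selection function is a hypothetical helper rather than how any particular packager decides.

```python
# Device class -> packaging formats it can play (simplified from the text).
PACKAGING_BY_DEVICE = {
    "older players without DRM": {"HLS + AES-128"},
    "older iOS": {"HLS + FairPlay"},
    "newer iOS": {"HLS + FairPlay", "CMAF CBC", "CMAF CTR"},
    "older Windows and Android": {"CMAF CTR"},
    "newer Windows and Android": {"CMAF CBC", "CMAF CTR"},
}


def required_packagings(matrix: dict[str, set[str]]) -> set[str]:
    """Pick a set of formats so that every device class can play one.

    Simple heuristic, not guaranteed minimal in general: formats forced by
    single-option devices are chosen first, then any uncovered device gets
    its alphabetically first format.
    """
    chosen: set[str] = set()
    for formats in matrix.values():
        if len(formats) == 1:
            chosen |= formats
    for formats in matrix.values():
        if not (formats & chosen):
            chosen.add(sorted(formats)[0])
    return chosen


for fmt in sorted(required_packagings(PACKAGING_BY_DEVICE)):
    print(fmt)
```

With this matrix, three separate packagings are needed because three device classes each accept only one format.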

If this sounds like a lot of encoding work, you’re right. As resolutions increase and codecs get more complex, it becomes harder to encode a complete ABR encoding ladder on a single machine, whether that machine is located in the cloud or on-premises. If your encoding hardware can’t keep up with the live feed, you may need to look at reducing the number of rungs on the encoding ladder, a move that could ultimately impact your audience’s experience.

To keep pace with more complex encoding requirements, the traditional model means that producers must continually invest in new hardware to maintain speed and quality. Ultimately, for a streaming service such as the one Verizon Digital Media Services offers, the 1:1 stream-to-encoder model fails to deliver the reliability, flexibility, and scalability we need to meet our customers’ expectations.

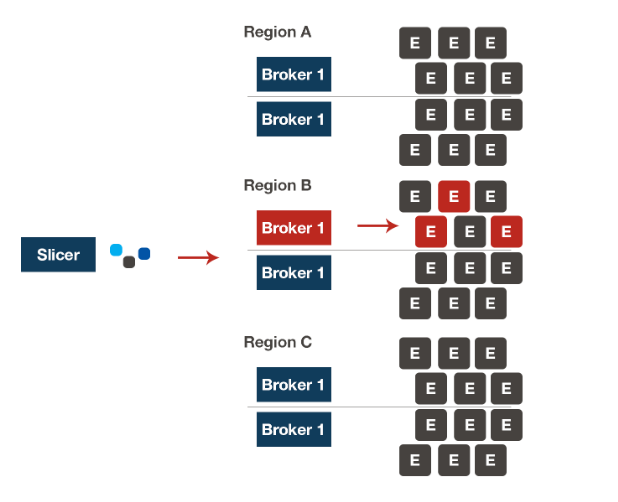

Instead, we have developed a sophisticated brokering system that allows the use of as many encoders as we need, all running in a cloud-based infrastructure. The brokering system receives the chunks of content from Slicer instances and moves them to the most optimal encoder available at the time. This action keeps encoding processes from overburdening a specific machine and keeps the chunks moving through the system to storage and out to viewers.

In our implementation, a broker instance acts as a manager between the Slicer and the encoders. The broker makes sure that any new Slicer gets its data routed to the proper encoder and verifies that the encoder can handle the workload. Additionally, we have a very capable scaling infrastructure. If we were suddenly handed a million hours of VOD content that needed to be encoded, we could ramp up server instances very quickly and start processing content. We can also scale down just as quickly to conserve resources. This broker process manages our whole cloud infrastructure seamlessly and, more importantly, does it all automatically.
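The brokering idea can be illustrated with a toy model. The sketch below is not our actual broker: the class names, the "most free slots" selection rule, and the scale-up behavior are all assumptions chosen to show the general pattern of routing work to an encoder with spare capacity and adding capacity when none is left.

```python
from dataclasses import dataclass


@dataclass
class Encoder:
    name: str
    capacity: int     # segments this encoder can process concurrently
    active: int = 0   # segments currently in progress

    @property
    def free_slots(self) -> int:
        return self.capacity - self.active


class Broker:
    """Toy broker: route each segment to the encoder with the most headroom."""

    def __init__(self, encoders: list[Encoder]):
        self.encoders = list(encoders)

    def assign(self, segment_id: str) -> Encoder:
        best = max(self.encoders, key=lambda e: e.free_slots)
        if best.free_slots <= 0:
            # Hypothetical scale-up: start another instance on demand.
            best = Encoder(name=f"encoder-{len(self.encoders) + 1}", capacity=1)
            self.encoders.append(best)
        best.active += 1
        return best

    def complete(self, encoder: Encoder) -> None:
        """Release the slot once a segment has been encoded."""
        encoder.active -= 1


broker = Broker([Encoder("encoder-1", capacity=1), Encoder("encoder-2", capacity=1)])
for segment in ["ch1-seg-001", "ch2-seg-001", "ch3-seg-001"]:
    print(segment, "->", broker.assign(segment).name)
```

In this run the third segment arrives while both encoders are busy, so the toy broker adds a third instance instead of queuing the work behind a busy machine.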

Stateless encoders

Of course, the brokering system would be of limited value if all it could do were point a Slicer to a single encoder that may or may not be able to keep up with the demands of the live stream, a serious problem with 4K. The solution we developed involves the use of stateless encoders. Instead of devoting a single machine to the entire stream, each encoder receives only a single 2- or 4-second segment of video at a time. Each segment includes enough information to prime the encoder, such as lead-in and lead-out information, so the encoder can encode that segment and then discard the priming data. At this point, the segment is complete and ready to go, freeing the encoder to start on another piece of content from another channel or anything else.

In this model, there is also a considerable amount of redundancy built into the system. For instance, if an encoder crashes while processing a segment, the same segment is restarted on another machine and completes before any issue is noticeable in the stream.
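Because each segment carries everything an encoder needs, failover reduces to handing the same segment to a different machine. The following is a minimal sketch of that pattern. It models encoders as plain callables and uses a hypothetical `EncoderCrashed` exception. A real system would also enforce deadlines so the retry finishes in time.

```python
from typing import Callable


class EncoderCrashed(Exception):
    """Raised when an encoder fails partway through a segment (hypothetical)."""


def encode_with_failover(segment: bytes,
                         encoders: list[Callable[[bytes], bytes]]) -> bytes:
    """Try each encoder in turn until one returns an encoded segment.

    This works only because encoding is stateless: any encoder can
    process any segment without knowing what came before it.
    """
    for encoder in encoders:
        try:
            return encoder(segment)
        except EncoderCrashed:
            continue  # hand the same segment to the next machine
    raise RuntimeError("no encoder could process the segment")


def crashing_encoder(segment: bytes) -> bytes:
    raise EncoderCrashed("simulated failure")


def healthy_encoder(segment: bytes) -> bytes:
    return b"encoded:" + segment  # stand-in for real encoding work


print(encode_with_failover(b"segment-42", [crashing_encoder, healthy_encoder]))
```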

This approach also allows the use of more cost-effective hardware. For example, if we know that a slower machine can take 8 seconds to process a 4-second file coming in from a Slicer, we can spread the workload across multiple encoders as shown below: server A gets slice 1, server B gets slice 2, and so on. Then, because they all complete at a predictable time interval, the chunks are delivered without interruption. As shown in the chart below, this example would result in latency behind live of 16–20 seconds.
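The arithmetic behind this round-robin spread can be sketched in a few lines. The model below covers only the encode stage: it assumes a slice becomes available the moment its capture ends, and it ignores upload, packaging, delivery, and player buffering, so it is not meant to reproduce the end-to-end latency figure. Function names are hypothetical.

```python
import math


def encoders_needed(encode_time_s: float, segment_duration_s: float) -> int:
    """Minimum encoders so that round-robin assignment never falls behind."""
    return math.ceil(encode_time_s / segment_duration_s)


def schedule(num_segments: int, encode_time_s: float = 8,
             segment_duration_s: float = 4) -> list[tuple[int, str, float, float]]:
    """Return (slice number, server, arrival time, finish time) per slice."""
    n = encoders_needed(encode_time_s, segment_duration_s)
    free_at = [0.0] * n  # time at which each server is next idle
    rows = []
    for i in range(num_segments):
        arrival = (i + 1) * segment_duration_s  # slice is complete at this time
        server = i % n                          # A, B, A, B, ...
        start = max(arrival, free_at[server])
        finish = start + encode_time_s
        free_at[server] = finish
        rows.append((i + 1, chr(ord("A") + server), arrival, finish))
    return rows


print("encoders needed:", encoders_needed(8, 4))
for number, server, arrival, finish in schedule(4):
    print(f"slice {number} -> server {server}: arrives t={arrival}s, done t={finish}s")
```

With an 8-second encode and 4-second slices, two servers are enough: each one becomes idle exactly when its next slice arrives, so encoded slices come out every 4 seconds.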

Ultimately, the volume of servers in the cloud — even if the individual servers themselves are slower — means that encoding processes can always keep up with live demands. If you wanted to set up an encoding infrastructure using a traditional model, you would need to invest in expensive high-performance machines or specialty hardware each capable of processing the entire incoming video without assistance in real time. By taking advantage of the scalability of the cloud, we significantly reduce encoding costs.

Another advantage of stateless cloud encoding is that we can easily move workloads to alternate cloud providers since we don’t have specialized server requirements. With a network approaching 100 Tbps of capacity, a multi-cloud approach offers inherent advantages.

Cost-effective live streaming

For live content producers, the technical considerations for preparing live video for cloud streaming can present formidable obstacles. You’ll face a host of problems ranging from bandwidth limitations at venues to complex questions surrounding encoders and streaming protocols. Although it doesn’t eliminate the need for some connectivity at the venue, a simplified workflow with reduced bandwidth requirements on the front end can dramatically reduce upfront and ongoing expenditures while delivering the high-quality, low-latency streams your viewers expect.

Visit verizondigitalmedia.com to learn more.